MindBigData The Visual "MNIST" of Brain Digits (2021-2023)

CustomCap64 Version 0.

Files available for download:

| DataBase | File | Zip size | File size | Date | MNIST Digits | Version |
|---|---|---|---|---|---|---|
| CustomCap64-v0.016 | MindBigDataVisualMnist2022-Cap64v0.016.zip | 215 MB (225,740,659 bytes) | 518 MB (543,918,855 bytes) | 12/27/2022 | 1,162 | v 0.016 |
| CustomCap64Morlet-v0.016 | MindBigDataVisualMnist2022-Cap64v0.016M.zip | 3 GB (3,251,646,393 bytes) | 3.5 GB (3,569,195,139 bytes) | 12/27/2022 | 1,162 | v 0.016M |
| Muse2-v0.17 | MindBigDataVisualMnist2021-Muse2v0.17.zip | 709 MB (743,459,783 bytes) | 2.4 GB (2,551,653,293 bytes) | 01/12/2022 | 18,000 | v 0.17 |

For the Custom Cap 64 Channels device: 59,494,400 EEG Data Points.

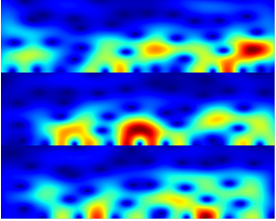

The DataBase named CustomCap64Morlet-v___ also comes with a Morlet wavelet transform scalogram PNG image for all the EEG data of the Custom Cap 64.

Each color PNG file is 400 pixels wide by 6,400 pixels high, using the x axis as "time" from left to right and 100 pixels on the y axis for the resulting Morlet wavelet data of each channel, in this channel order from the top of the image to the bottom: "FP1, FPz, FP2, AF3, AFz, AF4, F7, F5, F3, F1, Fz, F2, F4, F6, F8, FT7, FC5, FC3, FC1, FCz, FC2, FC4, FC6, FT8, T7, C5, C3, C1, Cz, CCPz, C2, C4, C6, T8, TP7, CP5, CP3, CP1, CPz, CP2, CP4, CP6, TP8, P7, P5, P3, P1, Pz, P2, P4, P6, P8, PO7, PO5, PO3, POz, PO4, PO6, PO8, CB1, O1, Oz, O2 and CB2."
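As a minimal sketch of how the scalogram layout described above could be indexed, the following Python snippet maps a channel name to its band of pixel rows. It assumes the 64 bands are stacked contiguously with no padding between them, which matches 64 x 100 = 6,400 rows but is not stated explicitly; `channel_rows` is a hypothetical helper name.

```python
# Channel order as listed above (top of the PNG to the bottom).
CHANNELS = (
    "FP1 FPz FP2 AF3 AFz AF4 F7 F5 F3 F1 Fz F2 F4 F6 F8 "
    "FT7 FC5 FC3 FC1 FCz FC2 FC4 FC6 FT8 "
    "T7 C5 C3 C1 Cz CCPz C2 C4 C6 T8 "
    "TP7 CP5 CP3 CP1 CPz CP2 CP4 CP6 TP8 "
    "P7 P5 P3 P1 Pz P2 P4 P6 P8 "
    "PO7 PO5 PO3 POz PO4 PO6 PO8 CB1 O1 Oz O2 CB2"
).split()

ROWS_PER_CHANNEL = 100   # y pixels per channel
IMAGE_WIDTH = 400        # x pixels, the "time" axis


def channel_rows(name):
    """Return the half-open (top, bottom) pixel row range of one channel.

    Assumption: bands are contiguous, 100 rows each, in CHANNELS order.
    """
    index = CHANNELS.index(name)
    top = index * ROWS_PER_CHANNEL
    return top, top + ROWS_PER_CHANNEL


top, bottom = channel_rows("Cz")
print(len(CHANNELS), top, bottom)  # 64 2800 2900
```

With an image loaded as a rows-first array of shape (6400, 400, ...) by any PNG library, `image[top:bottom, :]` would then select the band for that channel.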

The following image is a sample of just 3 EEG channels of the 64 that are included in each PNG image:

For the Muse2 device: 73,728,000 EEG data points, plus 55 million PPG data points, 55 million accelerometer data points and 55 million gyroscope data points.
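The totals quoted for both devices can be related to the per-row sample counts given in the FILE FORMAT section. The short Python check below is only a sketch of that arithmetic; the variable names are illustrative.

```python
# Totals quoted on this page.
CAP64_POINTS = 59_494_400
MUSE2_POINTS = 73_728_000

# EEG values per row, from the FILE FORMAT section.
CAP64_PER_ROW = 64 * 400   # 64 channels x 400 samples (2 s at 200 Hz)
MUSE2_PER_ROW = 4 * 512    # 4 channels x 512 samples (2 s at 256 Hz)

cap64_rows, cap64_rest = divmod(CAP64_POINTS, CAP64_PER_ROW)
muse2_rows, muse2_rest = divmod(MUSE2_POINTS, MUSE2_PER_ROW)
print(cap64_rows, cap64_rest)  # 2324 0
print(muse2_rows, muse2_rest)  # 36000 0

# PPG, accelerometer and gyroscope each contribute 3 channels x 512 samples.
ppg_points = muse2_rows * 3 * 512
print(ppg_points)              # 55296000, i.e. the "55 million" quoted
```

Both row counts come out at exactly twice the "MNIST Digits" figures in the table (1,162 and 18,000). That would be consistent with one black-signal row per digit shown, although the page does not state this.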

Feel free to test any machine learning, deep learning or other algorithm you think could fit. We only ask that you acknowledge the source, and please let us know about your performance!

In this new dataset for Muse 2, other bio-signals beyond EEG have been included to foster the use of multimodal data in training algorithms, since this could help different lines of research.

Muse 2 January 2022 Update: Due to the EEG signal noise detected in some channels of the Muse2 recordings, subsets have been created that keep only the best signals, still in raw format: one with 2 channels, "Cut2" (TP9 & TP10), and another with 3 channels, "Cut3" (TP9, AF7 & TP10).

| DataBase | File | Zip size | File size | Date | MNIST Digits | Version |
|---|---|---|---|---|---|---|
| Muse2-v0.16Cut2 | MindBigDataVisualMnist2021-Muse2v0.16Cut2.zip | 189 MB (198,358,166 bytes) | 659 MB (691,242,736 bytes) | 01/09/2022 | 11,387 | v 0.16Cut2 |
| Muse2-v0.16Cut3 | MindBigDataVisualMnist2021-Muse2v0.16Cut3.zip | 20.9 MB (21,917,547 bytes) | 74.8 MB (78,476,950 bytes) | 01/09/2022 | 1,184 | v 0.16Cut3 |

The original Muse2 datasets with the 4 EEG channels above can still be used in many cases with further preprocessing.

New EEG headsets will be added through 2023.

FILE FORMAT:

The data is stored in a very simple text format (CSV-like, all comma separated) including:

[dataset]: a text pointing to the original Yann LeCun MNIST source type, can be "TRAIN" or "TEST", related to the 60,000 train digits and 10,000 test digits.

[origin]: 1 integer, used to reference the Yann LeCun MNIST location of the original digits in the source data files, from 0-59,999 for train, 0-9,999 for test, or -1 to indicate black signal (meaning not from the original MNIST datasets)

[digit_event]: 1 integer with the original MNIST label of the image from 0 to 9 or -1 to indicate black signal (no digit shown)

[original_png]: 784 integers (comma separated), with the original pixel intensities from the Yann LeCun MNIST source png files shown. Each pixel can have a value from 0 to 255 (for black signal all will be 0s). 784 comes from 28x28, since it is a single-channel square image, flattened.

[timestamp]: 1 Unix-like timestamp for the initial time of capture of the signals for this digit

[EEGdata]:

For Custom Cap 64 Channels

25,600 floating point (comma separated), sequentially 400 for each of the 64 EEG channels' raw signals (2 secs at 200 Hz), in this order: FP1, FPz, FP2, AF3, AFz, AF4, F7, F5, F3, F1, Fz, F2, F4, F6, F8, FT7, FC5, FC3, FC1, FCz, FC2, FC4, FC6, FT8, T7, C5, C3, C1, Cz, CCPz, C2, C4, C6, T8, TP7, CP5, CP3, CP1, CPz, CP2, CP4, CP6, TP8, P7, P5, P3, P1, Pz, P2, P4, P6, P8, PO7, PO5, PO3, POz, PO4, PO6, PO8, CB1, O1, Oz, O2 and CB2.

For Muse2

512 floating point (comma separated) EEG - TP9 channel raw signal (2 secs at 256 Hz), followed by

512 floating point (comma separated) EEG - AF7 channel raw signal (2 secs at 256 Hz), followed by

512 floating point (comma separated) EEG - AF8 channel raw signal (2 secs at 256 Hz), followed by

512 floating point (comma separated) EEG - TP10 channel raw signal (2 secs at 256 Hz)

For Muse2 Cut2 (only TP9 & TP10)

For Muse2 Cut3 (only TP9, AF7 & TP10)

For Muse2 (only)

512 floating point (comma separated) PPG1 ambient channel raw signal (2 secs at 256 Hz), followed by

512 floating point (comma separated) PPG2 infrared channel raw signal (2 secs at 256 Hz), followed by

512 floating point (comma separated) PPG3 red channel raw signal (2 secs at 256 Hz)

For Muse2 (only)

512 floating point (comma separated) Accelerometer X channel raw signal (2 secs at 256 Hz), followed by

512 floating point (comma separated) Accelerometer Y channel raw signal (2 secs at 256 Hz), followed by

512 floating point (comma separated) Accelerometer Z channel raw signal (2 secs at 256 Hz)

For Muse2 (only)

512 floating point (comma separated) Gyroscope X channel raw signal (2 secs at 256 Hz), followed by

512 floating point (comma separated) Gyroscope Y channel raw signal (2 secs at 256 Hz), followed by

512 floating point (comma separated) Gyroscope Z channel raw signal (2 secs at 256 Hz)
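The Muse2 column layout described above can be sketched as a table of offsets. The Python snippet below assumes the fields appear in each row in exactly the order they are listed here, and that the Cut2 and Cut3 files keep their EEG channels in that same relative order followed by the PPG, accelerometer and gyroscope blocks. Short labels such as `AccX` and the helper `muse_layout` are hypothetical names, not taken from the files.

```python
# (name, number of values) for the fields that precede the signals.
HEADER = [("dataset", 1), ("origin", 1), ("digit_event", 1),
          ("original_png", 784), ("timestamp", 1)]

MUSE_SAMPLES = 512  # 2 s at 256 Hz

EEG_CHANNELS = {
    "Muse2": ["TP9", "AF7", "AF8", "TP10"],
    "Muse2Cut3": ["TP9", "AF7", "TP10"],
    "Muse2Cut2": ["TP9", "TP10"],
}

# Illustrative labels for the nine non-EEG signals, in listed order.
EXTRA = ["PPG1", "PPG2", "PPG3",
         "AccX", "AccY", "AccZ",
         "GyroX", "GyroY", "GyroZ"]


def muse_layout(variant):
    """Return ({field: (start, stop)}, total_columns) for one variant."""
    fields = list(HEADER)
    fields += [("EEG_" + ch, MUSE_SAMPLES) for ch in EEG_CHANNELS[variant]]
    fields += [(name, MUSE_SAMPLES) for name in EXTRA]
    layout, start = {}, 0
    for name, width in fields:
        layout[name] = (start, start + width)
        start += width
    return layout, start


for variant in EEG_CHANNELS:
    layout, total = muse_layout(variant)
    print(variant, total, layout["EEG_TP10"])
```

Under these assumptions the totals come out at 7,444, 6,932 and 6,420 columns, which agrees with the per-row value counts this page gives for Muse2, Cut3 and Cut2.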

For Custom Cap 64 Channels, in total there are 26,388 comma-separated values per row.

For Muse2 data, in total there are 7,444 comma-separated values per row (6,932 for Muse2 Cut3 & 6,420 for Muse2 Cut2).

There are no headers in the files.
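A minimal sketch of reading one Custom Cap 64 row with the Python standard library follows. It assumes the timestamp parses as a number, that the 784 pixels are flattened row by row as in the original MNIST files, and that the EEG values are grouped channel by channel as described. `parse_cap64_row` is a hypothetical helper, and the demo row is synthetic rather than real data.

```python
import csv
import io

N_CHANNELS, N_SAMPLES = 64, 400
EXPECTED = 1 + 1 + 1 + 784 + 1 + N_CHANNELS * N_SAMPLES  # 26,388


def parse_cap64_row(row):
    """Split one row (a list of 26,388 strings) into named fields."""
    if len(row) != EXPECTED:
        raise ValueError(f"expected {EXPECTED} values, got {len(row)}")
    pixels = [int(v) for v in row[3:787]]
    eeg = [float(v) for v in row[788:]]
    return {
        "dataset": row[0].strip(),            # "TRAIN" or "TEST"
        "origin": int(row[1]),                # MNIST index, or -1
        "digit_event": int(row[2]),           # label 0-9, or -1
        # Assumption: row-major 28x28 flattening.
        "image": [pixels[r * 28:(r + 1) * 28] for r in range(28)],
        # Assumption: the Unix-like timestamp is numeric.
        "timestamp": float(row[787]),
        # One list of 400 samples per channel, in the listed channel order.
        "eeg": [eeg[c * N_SAMPLES:(c + 1) * N_SAMPLES]
                for c in range(N_CHANNELS)],
    }


# Synthetic demo row, only to show the shapes involved.
demo = ["TRAIN", "0", "5"] + ["0"] * 784 + ["1671000000.0"] + ["0.5"] * 25600
row = next(csv.reader(io.StringIO(",".join(demo))))
record = parse_cap64_row(row)
print(record["dataset"], record["digit_event"],
      len(record["image"]), len(record["eeg"]), len(record["eeg"][0]))
# TRAIN 5 28 64 400
```

Since the files have no header line, a real file could be passed to `csv.reader` directly and every row parsed this way without skipping anything.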

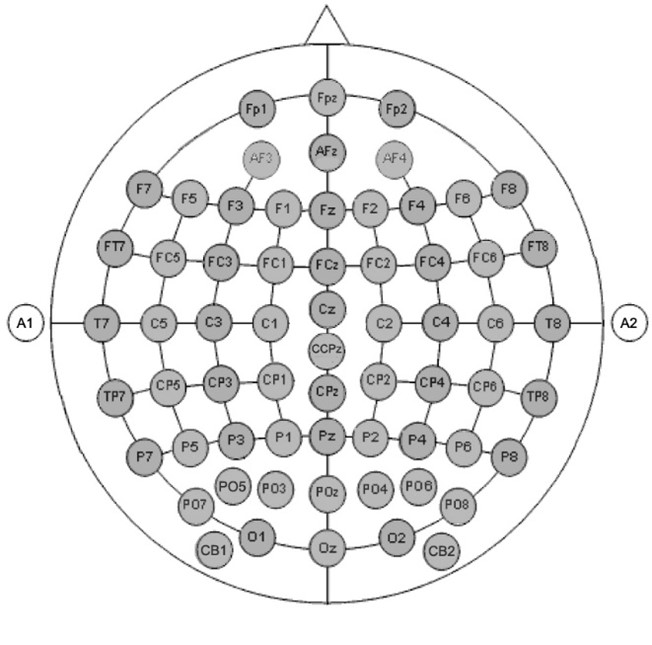

The Custom 64 Channels Cap follows this 10/20 distribution:

RELATED RESEARCH, CITATIONS & RESULTS by 3rd parties (Using the previous "MNIST" of brain digits dataset):

- Giving sense to EEG records ( course IFT6390 "machine learning" by Pascal Vincent from MILA) by Amin Shahab, Marc Sayn-Urpar, René Doumbouya, Thomas George & Vincent Antaki.

- Contribution aux décompositions rapides des matrices et tenseurs , Viet-Dung NGUYEN THÈSE UNIVERSITÉ D’ORLÉANS Nov-16th-2016

- Repositórios de dados de pesquisa na Espanha: breve análise.,Fernanda Passini MORENO, Universidade de Brasília Jun-2017

- Fast learning of scale-free networks based on Cholesky factorization, Vladislav Jelisavčić, Ivan Stojkovic, Veljko Milutinovic, Zoran Obradovic May-2018

- STRUCTURED LEARNING FROM BIG DATA BASED ON PROBABILISTIC GRAPHICAL MODELS, Vladislav Jelisavčić UNIVERSITY OF BELGRADE SCHOOL OF ELECTRICAL ENGINEERING May-2018

- Combination of Wavelet and MLP Neural Network for Emotion Recognition System, Phuong Huy Nguyen,Thai Nguyen University of Technology (TNUT) & Thu May Duong ,Thi Mai Thuong Duong ,Thu Huong Nguyen University of Information and Communication Technology Vietnam Nov-2018

- A Deep Evolutionary Approach to Bioinspired Classifier Optimisation for Brain-Machine Interaction, Jordan J. Bird , Diego R. Faria, Luis J. Manso, Anikó Ekárt, and Christopher D. Buckingham, School of Engineering and Applied Science, Aston University, Birmingham, UK Mar-2019

- Novel joint algorithm based on EEG in complex scenarios, Dongwei Chen, Weiqi Yang, Rui Miao, Lan Huang, Liu Zhang, Chunjian Deng & Na Han School of Business, Beijing Institute of Technology, Zhuhai, China Aug-2019

- HHHFL: Hierarchical Heterogeneous Horizontal Federated Learning for Electroencephalography, Dashan Gao, Ce Ju, Xiguang Wei, Yang Liu, Tianjian Chen and Qiang Yan, Hong Kong University of Science and Technology, AI Lab, WeBank Co. Ltd. Sep-2019

- Universal EEG Encoder for Learning Diverse Intelligent Tasks,Baani Leen Kaur Jolly, Palash Aggrawal, Surabhi S Nath, Viresh Gupta, Manraj Singh Grover, Rajiv Ratn Shah, MIDAS Lab, IIIT-Delhi Nov-2019

- Deep Learning based Recognition of Visual Digit Reading Using Frequency Band of EEG,Jaesik Kim , Jeongryeol Seo , and Kyungah Son, Ajou University Republic of Korea. 2019

- Stanford CS230 - Group Project Final Report,Roman Pinchuk and Will Ross 2020

- Mental State Recognition and Recommendation of Aids to Stabilize the Mind Using Wearable EEG,M.W.A. Aruni Wijesuriya, University of Colombo School of Computing 2020

- Generating the image viewed from EEG signals, Gaffari ÇELİK, Muhammed Fatih 2020

- EEG-Based Emotion Classification for Alzheimer’s Disease Patients Using Conventional Machine Learning and Recurrent Neural Network Models,Mahima Chaudhary, Sumona Mukhopadhyay, Marin Litoiu, Lauren E Sergio, Meaghan S Adams Aug-2020

- Analysis of Multi-class Classification of EEG Signals Using Deep Learning,Dipayan Das, Tamal Chowdhury and Umapada Pal, National Institute of Technology, Durgapur, India Oct-2020

- Understanding Brain Dynamics for Color Perception Using Wearable EEG Headband,Jungryul Seo, Teemu H. Laine, Gyuhwan Oh, Kyung-Ah Sohn Dec-2020

- Image-based Deep Learning Approach for EEG Signal Classification, Haneul Yoo, Jungyul Seo, Kyung-Ah Sohn Ajou University. 2020

- Frequency Band and PCA Feature Comparison for EEG Signal Classification, Wayan Pio Pratama, Made Windu Antara Kesiman, Gede Aris Gunadi, Apr-2021

- Toward lightweight fusion of AI logic and EEG sensors to enable ultra edge-based EEG analytics on IoT devices, Tazrin Tahrat, May-2021

- Deep Learning in EEG: Advance of the Last Ten-Year Critical Period,Shu Gong, Kaibo Xing, Andrzej Cichocki, Junhua Li May-2021

- Convolutional Neural Network-Based Visually Evoked EEG Classification Model on MindBigData,Nandini Kumari, Shamama Anwar,Vandana Bhattacharjee Jun-2021

- Using Convolutional Neural Networks for EEG analysis, Arina Kazakova, Swarthmore College, Pennsylvania, United States Jul-2021

- Emotional behavior analysis based on EEG signal processing using Machine Learning: A case study,Salim KLIBI, Makram Mestiri, Imed Riadh FARAH, National School of Computer Sciences Tunis, Tunisia Jul-2021

- Visual Brain Decoding for Short Duration EEG Signals,Rahul Mishra, Krishan Sharma, Arnav Bhavsar Aug-2021

- Emotion detection using EEG signals based on Multivariate Synchrosqueezing Transform and Deep Learning,Tugba Ergin; Mehmet Akif Ozdemir; Onan Guren Dec-2021

- Quality analysis for reliable complex multiclass neuroscience signal classification via electroencephalography, Ashutosh Shankhdhar, Pawan Kumar Verma, Prateek Agrawal, Vishu Madaan, Charu Gupta Jan-2022

- A Combinational Deep Learning Approach to Visually Evoked EEG-Based Image Classification, Nandini Kumari, Shamama Anwar, Vandana Bhattacharjee Jan-2022

- TOWARD RELIABLE SIGNALS DECODING FOR ELECTROENCEPHALOGRAM: A BENCHMARK STUDY TO EEGNEX, Xia Chen, Xiangbin Teng, Han Chen, Yafeng Pan & Philipp Geyer, Leibniz University Hannover, Germany, Department of Education and Psychology, Freie Universität Berlin, Germany & Department of Psychology and Behavioral Sciences, Zhejiang University, China Jul-2022

- A Review on EEG Data Classification Methods for Brain–Computer Interface,Vaibhav Jadhav, Namita Tiwari and Meenu Chawla, Maulana Azad National Institute of Technology, Bhopal, India Sep-2022

- A survey of electroencephalography open datasets and their applications in deep learning,Alberto Nogales, Álvaro García-Tejedor, Universidad Francisco de Vitoria Sep-2022

- Fortifying Brain Signals for Robust Interpretation,Kanan Wahengbam; Kshetrimayum Linthoinganbi Devi; Aheibam Dinamani Singh, Indian Institute of Technology Guwahati Guwahati, India Nov-2022

- Visually evoked brain signals guided image regeneration using GAN variants,Nandini Kumari, Manipal Institute of Technology , Shamama Anwar, Vandana Bhattacharjee, Sudip Kumar Sahana Birla Institute of Technology Mar-2023

- EEG-based classification of imagined digits using a recurrent neural network,Nrushingh Charan Mahapatra and Prachet Bhuyan Apr-2023

- Emotions Classification from EEG Waves Using Deep Learning, Vrachnaki Ioanna, University of Western Attica 2023

- RECOGNITION OF HUMAN EMOTIONS BASED ON EEG BRAINWAVE SIGNALS USING MACHINE LEARNING TECHNIQUES-A COMPARATIVE STUDY, Saba Tahseen, Ajit Dantii, Christ University, Bengaluru, India, 2023

- Emotion Detection Using Deep Normalized Attention-Based Neural Network and Modified-Random Forest Shtwai Alsubai, College of Computer Engineering and Sciences, Prince Sattam bin Abdulaziz University, Saudi Arabia 2023

- Flexible In-Ga-Zn-N-O synaptic transistor for ultralow-power neuromorphic computing and EEG-based brain-computer interfaces,Shuangqing Fan, Enxiu Wu, Minghui Cao, Ting Xu,Tong Liu, Lijun Yang,Jie Su and Jing Liu Jul-2023

- Decoding Thoughts with Deep Learning: EEG-Based Digit Detection using CNNs,Diganta Kalita Aug-2023

- Neural decoding of InterAxon Muse data using a recurrent convolutional neural network,Andrew Perez, Nov-2023

- Salient Arithmetic Data Extraction from Brain Activity via an Improved Deep Network,Nastaran Khaleghi, Shaghayegh Hashemi, Sevda Zafarmandi Ardabili, Sobhan Sheykhivand and Sebelan Danishvar, Dec-2023

- A Review on:EEG-Based Brain-Computer Interfaces for Imagined Speech Classification,Pravin V. Dhole, Deepali G Chaudhary, Vijay D. Dhangar, Sulochana D. Shejul, Dr. Bharti W. Gawali, May-2024

- An Analysis of EEG Signal Classification for Digit Dataset, Asif Iqbal, Arpit Bhardwaj, Ashok Kumar Suhag, Manoj Diwakar, Anchit Bijalwan, Aditi Bhardwaj, Madhushi Verma, May-2024

- EEG Signal Analysis for Numerical Digit Classification: Methodologies and Challenges, Augoustos Tsamourgelis and Adam Adamopoulos, Feb-2025

RELATED RESEARCH, CITATIONS & RESULTS by 3rd parties (Using the previous "IMAGENET" of the brain dataset):

- Inferencia de la Topologia de Grafs,Tura Gimeno Sabater, UPC 2020

- Understanding Brain Dynamics for Color Perception using Wearable EEG headband Mahima Chaudhary, Sumona Mukhopadhyay, Marin Litoiu, Lauren E Sergio, Meaghan S Adams York University, Toronto, Canada 2020

- Developing a Data Visualization Tool for the Evaluation Process of a Graphical User Authentication System Loizos Siakallis , UNIVERSITY OF CYPRUS USA 2020

- Object classification from randomized EEG trials Hamad Ahmed, Ronnie B Wilbur,Hari M Bharadwaj and Jeffrey Mark, Purdue University CVPR 2021 USA 2021

- Evaluating the ML Models for MindBigData (IMAGENET) of the Brain Signals Priyanka Jain, Mayuresh Panchpor, Saumya Kushwaha and Naveen Kumar Jain, Artificial Intelligence Group, CDAC, Delhi 2022

- Pattern recognition and classification of EEG signals for identifying the human-specific behaviour Gunavathie MA, S. Jacophine Susmi, Panimalar Engineering College Chennai, University College of Engineering, Tindivanam 2023

- Reconstructing Static Memories from the Brain with EEG Feature Extraction and Generative Adversarial Networks Matthew Zhang Westlake High School, USA, and Jeremy Lu Saratoga High School, USA 2023

- REEGNet: A resource efficient EEGNet for EEG trail classification in healthcare, Khushiyant, Vidhu Mathur, Sandeep Kumar, Vikrant Shokeen 2024

- Decoding Brain Signals from Rapid-Event EEG for Visual Analysis Using Deep Learning, Madiha Rehman, Humaira Anwer, Helena Garay, Josep Alemany-Iturriaga, Isabel De la Torre Díez, Hafeez ur Rehman Siddiqui and Saleem Ullah 2024

- Alljoined1 -- A dataset for EEG-to-Image decoding, Jonathan Xu, Bruno Aristimunha, Max Emanuel Feucht, Emma Qian, Charles Liu, Tazik Shahjahan, Martyna Spyra, Steven Zifan Zhang, Nicholas Short, Jioh Kim, Paula Perdomo, Ricky Renfeng Mao, Yashvir Sabharwal, Michael Ahedor, Moaz Shoura, Adrian Nestor 2024

RELATED RESEARCH, CITATIONS & RESULTS by 3rd parties, exploring MindBigData 2022 A Large Dataset of Brain Signals and/or Visual MNIST Datasets:

- BCI interface for word recognition in EEG signals, Pablo Martín Puerto , Universidad de Sevilla 2023

- An Analysis of EEG Signal Classification for Digit Dataset, Asif Iqbal, Arpit Bhardwaj, Ashok Kumar Suhag, Manoj Diwakar, Anchit Bijalwan, Aditi Bhardwaj, Madhushi Verma May-2024

- Alljoined1 - A dataset for EEG-to-Image decoding ,Jonathan Xu , Bruno Aristimunha , Max Emanuel Feucht,Emma Qian , Charles Liu , Tazik Shahjahan , Martyna Spyra, Steven Zifan Zhang, Nicholas Short, Jioh Kim, Paula Perdomo, Ricky Renfeng Mao, Yashvir Sabharwal, Michael Ahedor, Moaz Shoura, Adrian Nestor May-2024

- An Assessment of Modeling Dynamics in EEG Signals Using Learned Sparse Nonlinear Representations,Dragos Constantin Popescu; Andrei Mateescu; Ioana Livia Stefan; Ioana Miruna Vlasceanu; Ioan Stefan Sacala; Ioan Dumitrache Jun-2024

- EIT-1M: One Million EEG-Image-Text Pairs for Human Visual-textual Recognition and More, Xu Zheng, Ling Wang, Kanghao Chen, Yuanhuiyi Lyu, Jiazhou Zhou, Lin Wang Jul-2024

- The Progress and Prospects of Data Capital for Zero-Shot Deep Brain Computer Interfaces,Wenbao Ma, Teng Ma,Daniel Organisciak, Jude E. T. Waide, Xiangxin Meng and Yang Long Jan-2025

- Thought2Text: Text Generation from EEG Signal using Large Language Models (LLMs),Abhijit Mishra, Shreya Shukla , Jose Torres, Jacek Gwizdka, Shounak Roychowdhury Feb-2025

- Removing neural signal artifacts with autoencoder-targeted adversarial transformers (AT-AT), Benjamin J. Choi Feb-2025

- Geometric Machine Learning on EEG Signals, Benjamin J. Choi Feb-2025

- Generative Adversarial Network for Image Reconstruction from Human Brain Activity, Tim Tanner and Vassilis Cutsuridis Mar-2025

Contact us if you need any more info.

May 10th 2025

David Vivancos

vivancos@vivancos.com

This MindBigData The Visual "MNIST" of Brain Digits is made available under the Open Database License: http://opendatacommons.org/licenses/odbl/1.0/. Any rights in individual contents of the database are licensed under the Database Contents License: http://opendatacommons.org/licenses/dbcl/1.0/